#How to install apache spark on ubuntu windows#

Apache Spark is developed in the Scala programming language and runs on the JVM, so installing Java is a mandatory prerequisite for Spark. Step 2: Open the downloaded Java SE Development Kit and follow the installer's instructions. Step 3: Open the environment variables settings by typing "environment variables" into the Windows search bar.
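Once Java is installed and the environment variables are set, a quick check can confirm that the JVM is visible before moving on to Spark. This is a minimal sketch: it assumes the variable being set is `JAVA_HOME` (the steps above do not name it), and `java_status` is a hypothetical helper name, not part of Spark or Java.

```python
import os
import shutil


def java_status(env=None):
    """Report whether Java looks discoverable from the given environment.

    JAVA_HOME is an assumption here; the article does not name the variable.
    """
    env = os.environ if env is None else env
    return {
        # Value of JAVA_HOME, or None if it is not set
        "JAVA_HOME": env.get("JAVA_HOME"),
        # Full path of the `java` executable found on PATH, or None
        "java_on_path": shutil.which("java", path=env.get("PATH")),
    }


print(java_status())
```

If `JAVA_HOME` comes back as `None` or no `java` executable is found, the environment variable step is worth revisiting before installing Spark.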

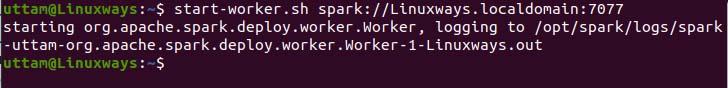

In this article, we will explore installing Apache Spark in standalone mode.

#How to install apache spark on ubuntu download#

If you’ve followed the steps in Part 1 and Part 2 of this series, you’ll have a working MicroK8s on the next-gen Ubuntu Core OS, deployed and running on the cloud with nested virtualisation using LXD. If so, you can exit any SSH session to your Ubuntu Core in the sky and return to your local system. Let’s take a look at getting Apache Spark on this thing so we can do all the data scientist stuff.

Call to hacktion: adapting the Spark Dockerfile

First, let’s download some Apache Spark release binaries and adapt the Dockerfile so that it plays nicely with pyspark: `wget`

The adapted Dockerfile includes the following lines:

- `chgrp root /etc/passwd && chmod ug+rw /etc/passwd && \`
- `COPY kubernetes/dockerfiles/spark/entrypoint.sh /opt/`
- `COPY kubernetes/dockerfiles/spark/decom.sh /opt/`
- `# Specify the User that the actual main process will run as`
- `EOF`

Containers: the hottest thing to hit 2009

So Apache Spark runs in OCI containers on Kubernetes. Let’s build that container image so that we can launch it on our Ubuntu Core hosted MicroK8s in the sky: `sudo apt install docker.io`

We will use an SSH tunnel to push the image to our remote private registry on MicroK8s. Yep, it’s time to open another terminal and run the following commands so we can set up a tunnel to help us do that: `GCE_IIP=$(gcloud compute instances list | grep ubuntu-core-20 | awk '')`

Landing on Jupyter: testing our Spark cluster from a Jupyter notebook

`ssh -L 8888:$UK8S_IP:$J_PORT ssh`

Now you should be able to browse to Jupyter using your workstation’s browser, nice and straightforward. In the newly opened Jupyter tab of your browser, create and launch a new iPython notebook, and add a Python script that exercises the cluster. If all goes well, you’ll be able to launch a Spark cluster, connect to it, and execute a parallel calculation when you run the stanza.

Ok, we didn’t do anything very advanced with our Spark cluster in the end. But in Part 4, we’ll take this still further. The next step is going to be all about banding together a bunch of these Ubuntu Core VM instances using LXD clustering and a virtual overlay fan network. With this approach, you will build your own highly available, fully distributed MicroK8s powered Kubernetes cluster. It’d be cool to have that up on the cloud as a “hardcore” (pardon my punning), zero-trust-security hardened, alternative way to run all the things. Turtles all the way down!
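The notebook script itself is not reproduced in this article. As a minimal sketch of the kind of parallel calculation described in the Jupyter step, the following PySpark code estimates pi by Monte Carlo sampling. The function names, the app name, and the sample sizes are illustrative assumptions, and the session is created with Spark's default settings rather than the cluster-specific configuration, which the article does not show.

```python
import random


def count_hits(samples, seed):
    """Count random points in the unit square that land inside the quarter circle."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(spark, partitions=4, samples_per_partition=100_000):
    """Spread the sampling over `partitions` Spark tasks and combine the counts."""
    sc = spark.sparkContext
    hits = (
        sc.parallelize(range(partitions), partitions)  # one seed per partition
        .map(lambda seed: count_hits(samples_per_partition, seed))
        .sum()
    )
    return 4.0 * hits / (partitions * samples_per_partition)


def run_on_spark():
    """Create a session with default settings (an assumption), run, and clean up."""
    # Imported here so the helpers above stay usable without pyspark installed
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("pi-sketch").getOrCreate()
    try:
        return estimate_pi(spark)
    finally:
        spark.stop()
```

In a notebook cell, calling `run_on_spark()` should return a value close to 3.14. Each partition gets its own seed so that the tasks draw different samples.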
